NVIDIA Introduces Omniverse NuRec Libraries, Cosmos Models, and RTX PRO Blackwell Servers to Accelerate Robotics and Physical AI

New simulation, world-generation, and reasoning tools—backed by powerful AI infrastructure—enable developers to build, train, and deploy next-generation robots with unprecedented speed, accuracy, and realism.


- New NVIDIA Omniverse NuRec 3D Gaussian Splatting Libraries Enable Large-Scale World Reconstruction

- New NVIDIA Cosmos Models Enable World Generation and Spatial Reasoning

- New NVIDIA RTX PRO Blackwell Servers and NVIDIA DGX Cloud Let Developers Run the Most Demanding Simulations Anywhere

- Physical AI Leaders Amazon Devices & Services, Boston Dynamics, Figure AI and Hexagon Embrace Simulation and Synthetic Data Generation

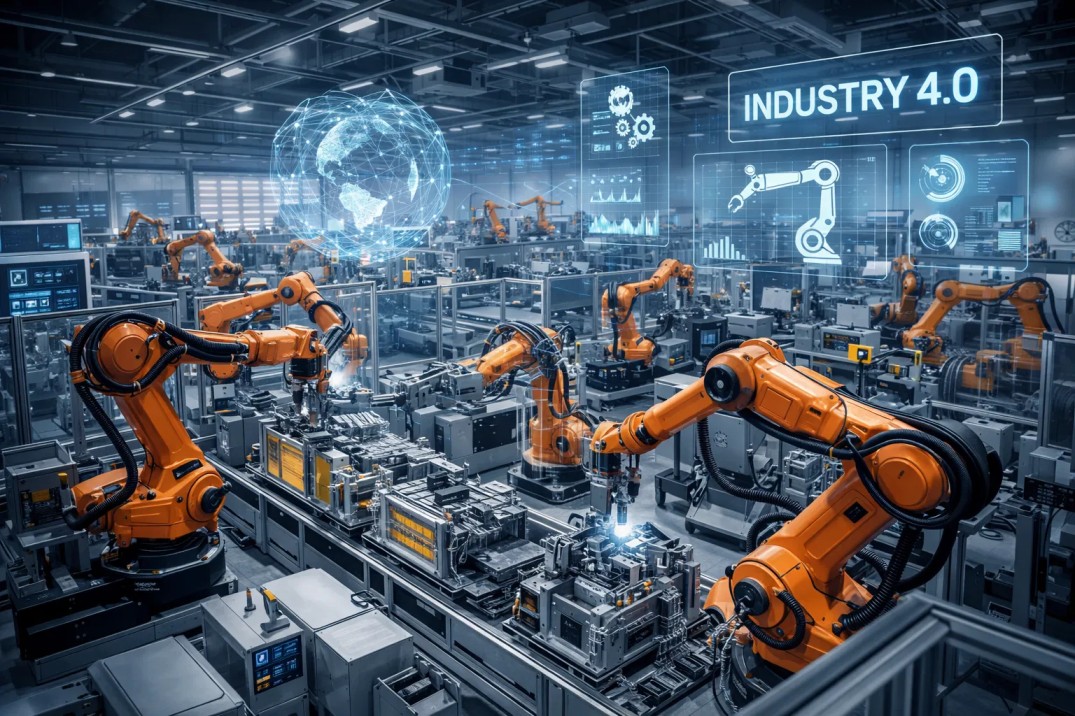

NVIDIA announced new NVIDIA Omniverse™ libraries and NVIDIA Cosmos™ world foundation models (WFMs) that accelerate the development and deployment of robotics solutions.

Powered by new NVIDIA RTX PRO™ Servers and NVIDIA DGX™ Cloud, the libraries and models let developers anywhere create physically accurate digital twins, capture and reconstruct the real world in simulation, generate synthetic data for training physical AI models and build AI agents that understand the physical world.

“Computer graphics and AI are converging to fundamentally transform robotics,” said Rev Lebaredian, vice president of Omniverse and simulation technologies at NVIDIA. “By combining AI reasoning with scalable, physically accurate simulation, we’re enabling developers to build tomorrow’s robots and autonomous vehicles that will transform trillions of dollars in industries.”

New NVIDIA Omniverse Libraries Advance Applications for World Composition

New NVIDIA Omniverse software development kits (SDKs) and libraries are now available for building and deploying industrial AI and robotics simulation applications.

Omniverse NuRec rendering is now integrated in CARLA, a leading open-source simulator used by over 150,000 developers. Autonomous vehicle (AV) toolchain leader Foretellix is integrating NuRec, NVIDIA Omniverse Sensor RTX™ and Cosmos Transfer to enhance its scalable synthetic data generation with physically accurate scenarios. Voxel51’s data engine for visual and multimodal AI, FiftyOne, supports NuRec to ease data preparation for reconstructions. FiftyOne is used by customers such as Ford and Porsche.

Boston Dynamics, Figure AI, Hexagon, RAI Institute, Lightwheel and Skild AI are adopting Omniverse libraries, Isaac Sim and Isaac Lab to accelerate their AI robotics development, while Amazon Devices & Services is using them to power a new manufacturing solution.

Cosmos Advances World Generation for Robotics

Cosmos WFMs, downloaded over 2 million times, let developers generate diverse data for training robots at scale using text, image and video prompts.

New models announced at SIGGRAPH deliver major advances in synthetic data generation speed, accuracy, language support and control:

- Cosmos Transfer-2, coming soon, simplifies prompting and accelerates photorealistic synthetic data generation from ground-truth 3D simulation scenes or spatial control inputs like depth, segmentation, edges and high-definition maps.

- A distilled version of Cosmos Transfer reduces the 70-step distillation process to a single step so developers can run the model on NVIDIA RTX PRO Servers at unprecedented speed.

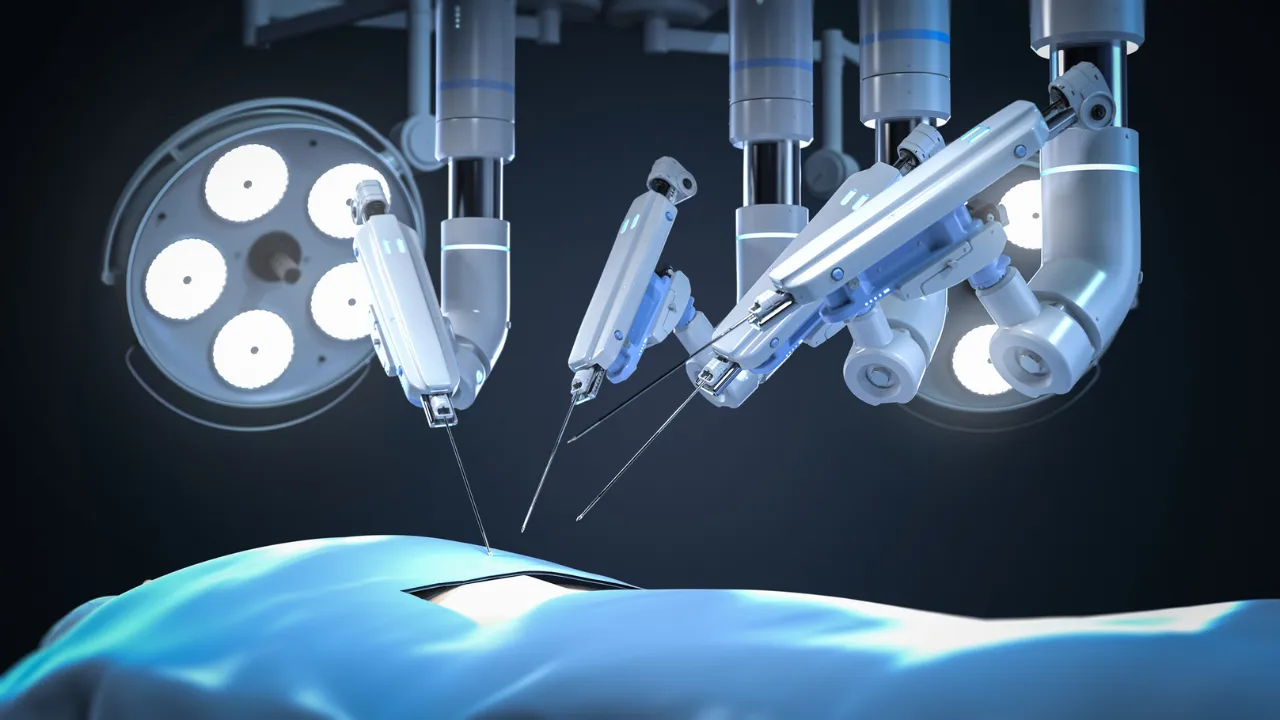

Lightwheel, Moon Surgical and Skild AI are using Cosmos Transfer to accelerate physical AI training by simulating diverse conditions at scale.

Cosmos Reason Breaks Through World Understanding

Since the introduction of OpenAI’s CLIP model, vision language models (VLMs) have transformed computer-vision tasks like object and pattern recognition. However, they have not yet been able to solve multistep tasks or handle ambiguity and novel experiences.

NVIDIA Cosmos Reason — a new open, customizable, 7-billion-parameter reasoning VLM for physical AI and robotics — lets robots and vision AI agents reason like humans, using prior knowledge, physics understanding and common sense to understand and act in the real world.

Cosmos Reason can be used for robotics and physical AI applications including:

NVIDIA’s robotics and NVIDIA DRIVE™ teams are using Cosmos Reason for data curation and filtering, annotation and VLA post-training. Uber is using it to annotate and caption AV training data.

Magna is developing with Cosmos Reason as part of its City Delivery platform — a fully autonomous, low-cost solution for instant delivery — to help vehicles adapt more quickly to new cities. Cosmos Reason adds world understanding to the vehicles’ long-term trajectory planner. VAST Data, Milestone Systems and Linker Vision are adopting Cosmos Reason to automate traffic monitoring, improve safety and enhance visual inspection in cities and industrial settings.

New NVIDIA AI Infrastructure Powers Robotics Workloads Anywhere

To enable developers to take full advantage of these advanced technologies and software libraries, NVIDIA announced AI infrastructure designed for the most demanding workloads.

Accelerating the Developer Ecosystem

To help robotics and physical AI developers advance 3D and simulation technology adoption, NVIDIA also announced:

- OpenUSD Curriculum and Certification, which addresses demand for USD expertise, with support from AOUSD members Adobe, Amazon Robotics, Ansys (part of Synopsys), Autodesk, Pixar, PTC, Rockwell Automation, SideFX, Siemens, TCS and Trimble, as well as industry leaders such as Hexagon.

- Open-source collaboration with Lightwheel to integrate robot policy training and evaluation frameworks into NVIDIA Isaac Lab, featuring parallel reinforcement learning training capabilities, benchmarks and simulation-ready assets for robot manipulation and locomotion.